Best Scraping Browsers for 2025: Top Tools

The world of web scraping has evolved a ton: it’s no longer just about writing simple scripts. Modern scraping browsers combine real browser engines with proxy networks and CAPTCHA solvers to tackle even the trickiest sites. In this post, I’ll walk through the top scraping browser tools for 2025, from industry heavyweights to new AI-powered startups. I’ll also explain how headless browser libraries like Puppeteer, Playwright, and Selenium fit into the mix. And yes, I’ll share some pro tips and my own “aha!” moments along the way.

What Is a Scraping Browser?

A scraping browser is basically a specialized browser or browser-like service built for web data extraction. Instead of just sending HTTP requests, it actually renders pages (often invisibly) and behaves like a real user. This means it can handle JavaScript-heavy pages, auto-rotate your IP address, solve CAPTCHAs, and bypass bot defenses – all under the hood. Think of it like a cloud-based Chrome or Firefox that’s been supercharged for scraping.

When I first tried scraping job listings from a popular site, I didn’t realize the listings were loaded via JavaScript. My simple Python script got back HTML with none of the listings in it! Only after switching to a headless browser (Chrome running without a GUI) did I get the content. That’s the power of a scraping browser: it sees what you’d see in a normal browser, just faster and automated.

Alt text: Close-up view of HTML source code on a computer screen, highlighting how web data appears in a browser (screenshot of HTML lists and links).

Pro Tip: Always start with a real browser to inspect a site’s HTML (or use a headless mode) before coding. That way you know if the content is really there or needs rendering.

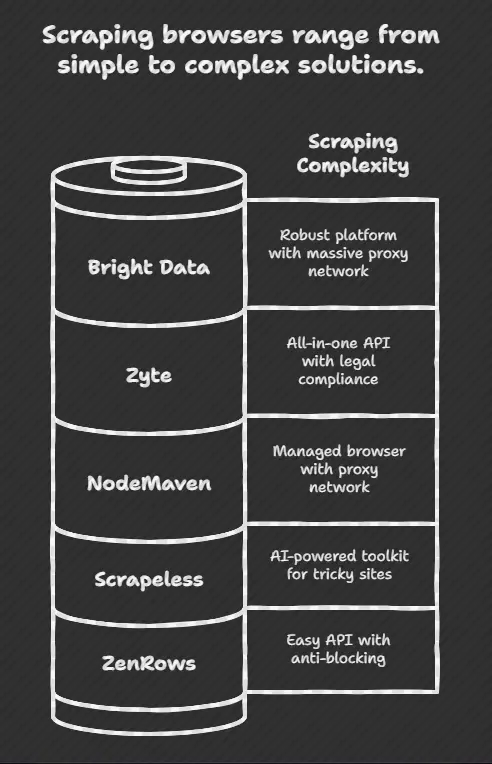
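If you’d rather run that check in code than by eye, here’s a minimal sketch using only Python’s standard library. It assumes you already have the raw HTML from a plain HTTP request (no browser involved). It reports whether a piece of text you expect appears in the page’s visible text, ignoring anything inside script and style tags. The class and function names are my own. Some sites ship their data as JSON inside a script tag, and this check deliberately won’t count that as visible.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text nodes that sit outside <script>, <style>, and <noscript>."""

    SKIP_TAGS = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0           # > 0 while inside a skipped tag
        self.chunks: list[str] = []   # visible text fragments, in document order

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.chunks.append(data)


def content_in_static_html(raw_html: str, expected_text: str) -> bool:
    """True if expected_text is already in the server-sent HTML (no JS rendering needed)."""
    parser = VisibleTextParser()
    parser.feed(raw_html)
    parser.close()
    # Collapse whitespace so line breaks and indentation don't break the match.
    visible = " ".join(" ".join(parser.chunks).split())
    return expected_text in visible


if __name__ == "__main__":
    js_shell = '<div id="jobs"></div><script>renderJobs()</script>'
    server_rendered = "<ul><li>Senior Python Engineer</li></ul>"
    print(content_in_static_html(js_shell, "Python Engineer"))         # False: needs a browser
    print(content_in_static_html(server_rendered, "Python Engineer"))  # True: plain HTTP is enough
```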

Best Scraping Browsers 2025

In this post, I’m walking you through five of the most popular scraping browsers and tools in 2025. I’ve tested and tinkered with each one (sometimes to the point of pulling my hair out), so I’ll give you the real talk—what’s great, what’s not, and who each one is best for.

What worked for me: I started with a free tool, broke a few scrapers (oops), then slowly worked my way up to the more advanced stuff. Don’t stress if it all feels a bit overwhelming at first. You’ll get the hang of it fast once you find the right tool.

Alright, ready to dive into the tools that can save you hours of grunt work? Let’s go!

Bright Data (Browser API)

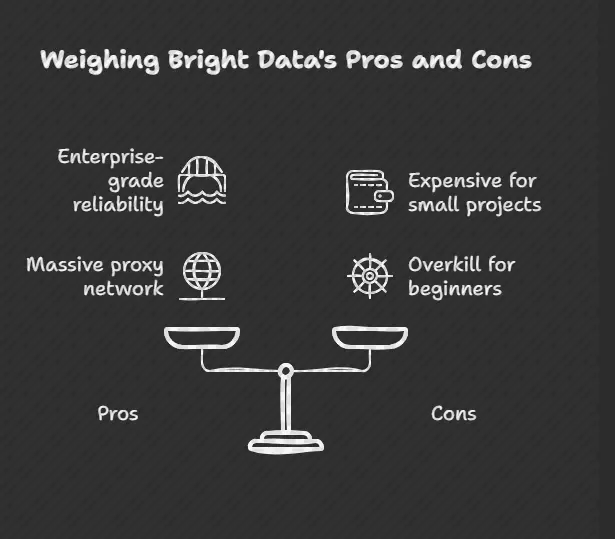

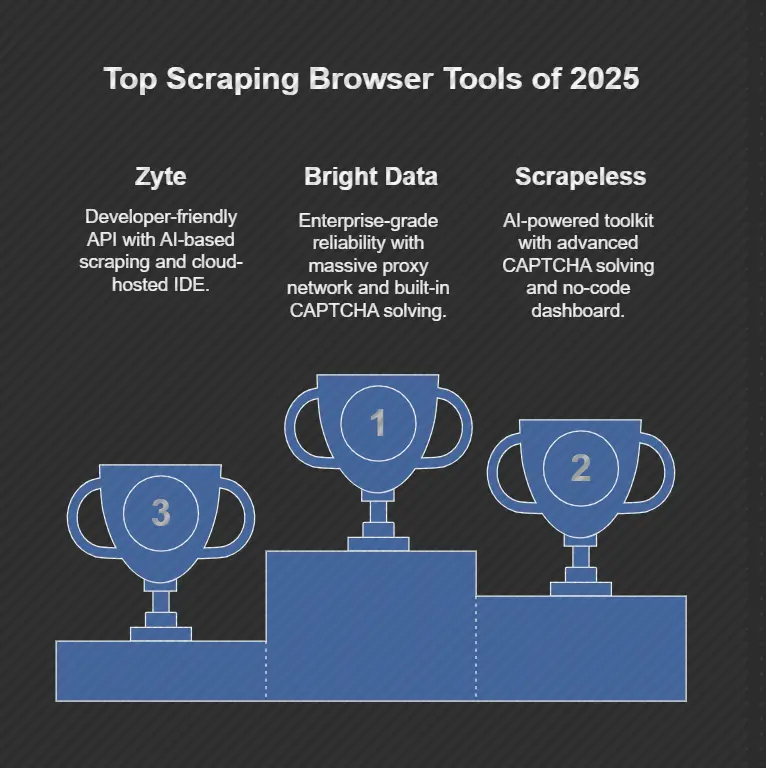

Bright Data (formerly Luminati) has one of the most robust scraping platforms. Their Browser API is a fully hosted solution: you run your Puppeteer, Playwright, or Selenium scripts against browsers in Bright Data’s cloud. In practice, it spins up real Chrome/Chromium instances for you, complete with built-in CAPTCHA solving and automated proxy management. Bright Data claims it can “simplify your dynamic scraping operations” and says it supports all major automation libraries.

- Pros: Enterprise-grade reliability. Massive proxy network (150M+ IPs) under the hood. Built-in tools for browser fingerprinting, geotargeting, referrers, and cookies. Advertises unlimited concurrency, so you can scale with volume. Connecting is typically a one-line change: you point your existing script at their browser endpoint instead of launching a local browser.

- Cons: Expensive for small projects. Bright Data tends to charge per gigabyte of traffic (starting around $8/GB). For casual users or hobby projects, that can add up quickly. It also packs in a lot of features, and the dashboard and pricing feel tailored for businesses. Beginners might find it overkill (and pricey) unless they really need that scale.
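Because per-GB billing scales with page weight, it’s worth doing the arithmetic before you commit. Here’s a back-of-the-envelope sketch. The page count, the average page size, and the $8/GB rate are illustrative assumptions, so plug in your own numbers and the vendor’s current price. It also assumes decimal units (1 GB = 1,000 MB).

```python
def estimate_traffic_cost(pages: int, avg_page_mb: float, price_per_gb: float) -> float:
    """Rough cost for per-GB billing.

    Assumes decimal units (1 GB = 1,000 MB) and that every page is fetched once;
    retries, redirects, and skipped resources would change the real figure.
    """
    total_gb = pages * avg_page_mb / 1000
    return round(total_gb * price_per_gb, 2)


# Hypothetical workload: 10,000 product pages at ~2 MB each, billed at $8/GB.
print(estimate_traffic_cost(10_000, 2.0, 8.0))  # 160.0
```

That works out to 20 GB of traffic. This is why trimming heavy page resources matters so much on per-GB plans.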

Unique feature: Bright Data’s “playground” allows you to test scripts in-browser via a code editor (similar to an online IDE). This can help debug your scraping code with real-time logs and visual feedback.

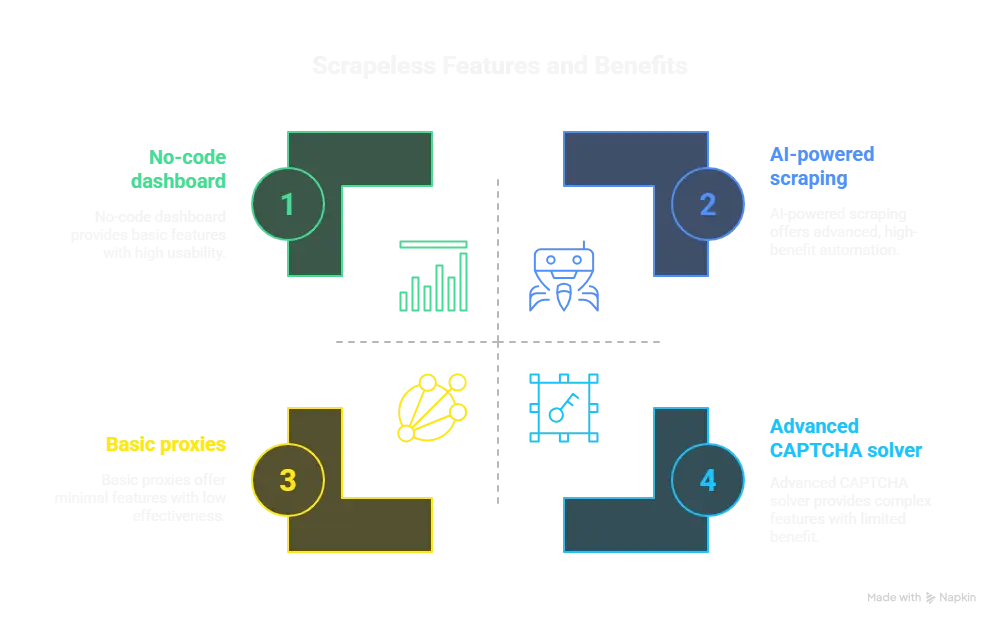

Scrapeless

Scrapeless is a newcomer (launched Jan 2025) that’s already making waves. It brands itself as an AI-powered scraping toolkit. The idea is that it offers a suite of tools – a Scraping Browser, Scraping API, Web Unlocker (for anti-bot), CAPTCHA Solver, proxies, and even an AI Agent for optimizing your scrape. The focus is on using AI and automation to tackle complex sites quickly. For example, their Scraping Browser “mimics real browser behavior” to extract dynamic content without tripping anti-bot protections.

- Pros: Modern, all-in-one approach. AI and RPA (robotic process automation) help it adapt to tricky sites. Includes advanced CAPTCHA solving (reportedly handles reCAPTCHA, Cloudflare Turnstile, etc. out of the box). A no-code dashboard lets you run or schedule scrapes. Built-in data cleaning and analysis is on the roadmap. Pricing starts relatively low (plans from about $49/month) with a free trial.

- Cons: Newer service, so it hasn’t been battle-tested like the older players. If something goes wrong, support might still be growing. Also, focusing on AI and analysis means it’s more business-oriented – it might feel heavyweight if all you want is raw data. Some features (like historical storage and analytics) are still being added.

Unique feature: Scrapeless emphasizes the AI Agent that can recommend scraping strategies and even clean your data for you. It’s one of the first tools to integrate AI/ML into the scraping workflow itself.

Zyte (Scraping APIs and Services)

Zyte (formerly Scrapinghub) is a veteran in web data. Rather than a “browser” product per se, Zyte offers cloud scraping services like the Zyte API and Scrapy Cloud hosting. In 2025 they highlight an all-in-one scraping API that handles proxy rotation and ban avoidance. Zyte’s API manages proxy assignments and cookies, and can even take screenshots of pages for you. You send a single API call, and they advertise high success rates, 99.9% uptime, and built-in compliance.
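Here’s what that “single API call” might look like. This is a minimal sketch that builds the request with Python’s standard library but doesn’t send it. The endpoint (`https://api.zyte.com/v1/extract`), the `browserHtml` and `screenshot` fields, and the API-key-as-username Basic auth follow Zyte’s public API docs as I understand them. Double-check them against the current reference before relying on this. The function name is mine.

```python
import base64
import json
import urllib.request

# Endpoint and field names as I understand Zyte's public API docs; verify before use.
ZYTE_EXTRACT_URL = "https://api.zyte.com/v1/extract"


def build_zyte_request(api_key: str, target_url: str, screenshot: bool = False) -> urllib.request.Request:
    """Build (but don't send) one Zyte API call asking for browser-rendered HTML."""
    payload = {"url": target_url, "browserHtml": True}
    if screenshot:
        payload["screenshot"] = True  # as I understand it, returned base64-encoded in the JSON
    # HTTP Basic auth with the API key as the username and an empty password.
    token = base64.b64encode(f"{api_key}:".encode()).decode()
    return urllib.request.Request(
        ZYTE_EXTRACT_URL,
        data=json.dumps(payload).encode(),
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
        method="POST",
    )


# Sending it needs a real key and network access:
# with urllib.request.urlopen(build_zyte_request("YOUR_API_KEY", "https://example.com")) as resp:
#     html = json.load(resp)["browserHtml"]
```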

- Pros: Developer-friendly and scalable. Zyte’s API abstracts almost everything: it auto-rotates IPs, handles login sequences, and can return structured JSON for many common page types. Features like AI-based scraping and a cloud-hosted IDE help build complex scrapers without managing infrastructure. Zyte also has strong legal compliance (they emphasize “patented AI with human oversight”), which is good if you’re cautious about terms of service.

- Cons: Less transparent than DIY tools. Because Zyte is a managed service, you’re somewhat in a black box. Advanced users who like full control might find it limiting. Also, costs can escalate: simple tasks are cheap, but JavaScript-heavy or large-scale scrapes cost more. Their pricing is usage-based and not fully public (you often need a quote), which can make budgeting tricky.

Unique feature: Zyte offers a TypeScript API and even a cloud-hosted VS Code environment, so you can write custom scraping logic (clicking, scrolling, etc.) right in your browser. This hybrid between a visual tool and full-code is fairly unique.

ZenRows

ZenRows markets itself as “the best web scraping toolkit”. It’s essentially a simple REST API with anti-blocking built in. Key features are auto-rotating proxies (residential IPs), headless browser rendering, CAPTCHA solving, and bypassing advanced anti-bot shields. You call the API, and ZenRows fetches the page for you, even if the site uses Cloudflare or heavy JS.
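Since it’s just a GET request, the whole integration is building one URL. Here’s a minimal sketch with Python’s standard library. The endpoint and the `apikey`, `url`, `js_render`, and `premium_proxy` parameter names are my understanding of ZenRows’ docs, so confirm them before use. The function name is hypothetical.

```python
from urllib.parse import urlencode

# Endpoint and parameter names as I understand ZenRows' docs; double-check before use.
ZENROWS_ENDPOINT = "https://api.zenrows.com/v1/"


def build_zenrows_url(api_key: str, target_url: str, js_render: bool = True,
                      premium_proxy: bool = False) -> str:
    """Return the GET URL for one ZenRows call; the target URL travels percent-encoded."""
    params = {"apikey": api_key, "url": target_url}
    if js_render:
        params["js_render"] = "true"      # render the page in a headless browser first
    if premium_proxy:
        params["premium_proxy"] = "true"  # route through residential IPs
    return f"{ZENROWS_ENDPOINT}?{urlencode(params)}"


# Fetching needs a real key and network access:
# import urllib.request
# html = urllib.request.urlopen(build_zenrows_url("YOUR_API_KEY", "https://example.com")).read().decode()
```

The target URL gets percent-encoded. Without that, its own `?` and `&` would be mistaken for ZenRows parameters.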

- Pros: Extremely easy to use. No setup – just sign up and hit the API endpoint. They even have a free tier (1,000 URLs) to try. ZenRows takes care of most issues for you (you don’t need to write any browser automation code yourself). Pricing is subscription-based from about $69/month (with an extra $0.09/hr for actual scraping time). Good for small teams or developers who want quick results.

- Cons: Less control. Since it’s a black-box API, you can’t tweak much aside from query parameters. Pricing adds up if you need heavy usage hours beyond the free quota. Also, compared to full scraping browser tools, ZenRows may not handle very exotic use-cases (its CAPTCHA solving is decent but not as advanced as specialized tools).

Unique feature: ZenRows offers a Screenshot API as part of their service – you can retrieve a PNG of the fully rendered page easily. This is handy if you need visual proof of data or want to parse images later.

NodeMaven

NodeMaven started as a proxy provider and now offers a Scraping Browser product. It’s essentially a managed browser environment (supporting Puppeteer, Playwright, and Selenium) combined with NodeMaven’s proxy network. Their marketing calls it an “anti-detect browser” that tackles the hardest anti-bot systems. Under the hood, it spins up real Chrome instances on demand and auto-rotates through residential/mobile proxies for maximum stealth.

- Pros: Great for developers who already use Puppeteer/Playwright. You can drop in your existing scripts and just point them at NodeMaven’s browser endpoint. They emphasize auto-scaling, so your jobs can run concurrently without you managing servers. The interface allows live debugging through Chrome DevTools, which is a nice developer-friendly touch. It also promises 24/7 support and straightforward pay-as-you-go pricing (free trial includes 500MB of browsing).

- Cons: Still a niche player. NodeMaven is less well-known than Bright Data or Zyte, so the community and third-party tutorials are smaller. It mostly bundles proxies and browsers, so its feature set is narrower (for example, I didn’t see custom AI or data analysis features). If your needs go beyond proxies and browsers (like heavy data pipelines), you might need to integrate other services.

Unique feature: NodeMaven’s focus on customization and real-time debugging. For example, they give you direct CDP (Chrome DevTools Protocol) access so you can tweak headers or inspect a session live. This is especially handy if you’re a hands-on developer who likes to see exactly what the browser is doing.
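Direct CDP access means you can send raw protocol commands to the browser. Here’s a minimal sketch with Playwright for Python. It assumes a Chromium-based browser, since Playwright’s CDP sessions don’t work with Firefox or WebKit. I’m not reproducing NodeMaven’s endpoint; get that from their docs. `Network.enable` and `Network.setExtraHTTPHeaders` are standard Chrome DevTools Protocol methods, and the helper names are mine. Playwright also has `page.set_extra_http_headers()` for this exact job. The raw CDP route just shows most clearly what CDP access gives you.

```python
from typing import Any


def cdp_header_commands(headers: dict[str, str]) -> list[tuple[str, dict[str, Any]]]:
    """CDP messages that make a Chromium tab send extra headers on every request."""
    return [
        ("Network.enable", {}),
        ("Network.setExtraHTTPHeaders", {"headers": dict(headers)}),
    ]


def apply_headers_via_cdp(context: Any, page: Any, headers: dict[str, str]) -> Any:
    """Open a raw CDP session on `page` (Playwright for Python, Chromium only) and send the commands."""
    session = context.new_cdp_session(page)
    for method, params in cdp_header_commands(headers):
        session.send(method, params)
    return session  # keep it to send more CDP commands or inspect the session live


# Usage, with `context` being any Chromium BrowserContext (local or attached to a hosted browser):
# page = context.new_page()
# apply_headers_via_cdp(context, page, {"Accept-Language": "en-US,en;q=0.9"})
# page.goto("https://example.com")
```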

Best Scraping Browsers & Headless Tools – 2025 Comparison Chart

| Tool | Type | Best For | Key Features | Pricing (Starts At) | CAPTCHA Solving | Headless Engine(s) | Ease of Use | Notes / Unique Strength |
|---|---|---|---|---|---|---|---|---|
| Bright Data | Scraping Browser (Cloud) | Large-scale enterprise scraping | 195+ locations, CAPTCHA solver, proxy management, browser fingerprinting, playground IDE | $8.4/GB or $499/month | ✅ Advanced (built-in) | Puppeteer, Playwright, Selenium | ⭐⭐⭐⭐ | Excellent for high volume + advanced targeting |
| Scrapeless | Scraping Browser (Cloud) | Devs and analysts needing full automation | CAPTCHA bypass (4 types), dynamic content handling, CDP API access, AI Agent suggestions | $49/month | ✅ Advanced (configurable) | Puppeteer, Playwright | ⭐⭐⭐⭐ | Ideal mix of ease + power, great AI-based automation |
| Zyte | Scraping API (Cloud) | Versatile web scraping with less setup | Auto proxy rotation, TypeScript API, VS Code in cloud, screenshot + geolocation support | Custom / Usage-Based | ✅ Yes (with paid tier) | Browser engine (abstracted) | ⭐⭐⭐⭐ | Code + no-code hybrid, highly developer-friendly |
| ZenRows | Scraping Browser API | Simple, fast scraping with minimal setup | Residential IPs, JavaScript rendering, screenshot API, 190+ geo IPs | $69/month + $0.09/hr | 🚫 Limited | Headless browser (abstracted) | ⭐⭐⭐ | Great entry point for quick scraping, limited CAPTCHA support |
| NodeMaven | Scraping Browser + Proxies | Custom workflows with live debugging | Real-time CDP debugging, header/cookie control, auto-scaling concurrency, included with proxy plan | Free w/ proxy plan | ✅ Yes (with proxy setup) | Puppeteer, Playwright | ⭐⭐⭐⭐ | Best for devs wanting control + debugging power |
| Puppeteer | Headless Browser Library | JavaScript-heavy site scraping | Full JS support, Chrome-first (Firefox supported since v23), high speed, supports screenshots & PDF rendering | Free (open-source) | 🚫 Requires 3rd party | Chromium, Firefox | ⭐⭐⭐ | Best for Node.js/JS users, easy syntax |
| Playwright | Headless Browser Library | Cross-browser scraping (Chromium, Firefox, WebKit) | Auto wait, built-in mobile emulation, cross-platform/browser support | Free (open-source) | 🚫 Requires 3rd party | Chromium, Firefox, WebKit | ⭐⭐⭐⭐ | Ideal for multi-browser needs |
| Selenium | Headless Browser Library | Complex form-based scraping | Supports many languages (Python, Java, C#), full interaction control, large community | Free (open-source) | 🚫 Requires 3rd party | Chrome, Firefox, Edge | ⭐⭐⭐ | Most flexible but slower + heavier setup |
| AdsPower | Headless Fingerprint Browser | Anti-detect scraping, multi-account tasks | Headless + fingerprint masking, works for stealth browsing, CLI/API start | Custom plans | ✅ Yes (in stealth mode) | Chromium (with GUI/headless) | ⭐⭐⭐ | Best for avoiding bot detection during scraping |

Headless Browsers and Scraping

A quick side note: tools like Puppeteer, Playwright, and Selenium are the automation libraries that power many scraping browsers. They’re associated with “headless” browsing because they can drive browsers without any GUI. In fact, a headless browser is literally just a regular browser (like Chrome or Firefox) running invisibly.

- Puppeteer is a Node.js library for controlling Chrome/Chromium. It’s super fast for scraping JavaScript-heavy sites.

- Playwright is similar but supports Chromium, Firefox, and WebKit (the engine behind Safari), which makes it great for cross-browser tasks.

- Selenium WebDriver works with many languages (Python, Java, etc.) and many browsers.

All three can launch a browser, navigate pages, and extract data. A key advantage of headless mode is speed: with no window to draw, scraping can be a bit faster. You can push this further by skipping heavy resources. ZenRows’ blog points out that not loading images and CSS can shave seconds off each page load.
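Here’s roughly what “launch, navigate, extract” plus resource blocking looks like with Playwright’s Python API. This is a minimal sketch that assumes Playwright and its Chromium build are installed. The set of blocked resource types is my own choice, and blocking stylesheets can occasionally break sites that rely on CSS to show content. The function names are hypothetical. Puppeteer offers the same idea through request interception.

```python
BLOCKED_RESOURCE_TYPES = frozenset({"image", "stylesheet", "font", "media"})


def should_block(resource_type: str) -> bool:
    """Heavy assets that rarely matter when you only want text or data."""
    return resource_type in BLOCKED_RESOURCE_TYPES


def scrape_texts(url: str, selector: str) -> list[str]:
    """Load `url` in headless Chromium with heavy assets blocked; return the text of every match."""
    # Imported here so should_block() works without Playwright installed.
    # Setup: pip install playwright && playwright install chromium
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        page = browser.new_page()
        # Intercept every request: abort heavy assets, let everything else through.
        page.route(
            "**/*",
            lambda route: route.abort()
            if should_block(route.request.resource_type)
            else route.continue_(),
        )
        page.goto(url)
        page.wait_for_selector(selector)  # wait for JS-rendered content to appear
        texts = page.locator(selector).all_inner_texts()
        browser.close()
        return texts


# Example (needs network access):
# print(scrape_texts("https://example.com", "h1"))
```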

However, scraping browsers (like the products we just discussed) often pair these libraries with a hosted browser. With Bright Data’s Browser API, for instance, Bright Data runs the browser and your Puppeteer, Playwright, or Selenium script connects to it remotely. NodeMaven lets these tools attach to its hosted browsers as well. The difference is who manages the browser: in a DIY setup you install Puppeteer and the browser on your own server; with a scraping browser, the company handles the infrastructure.
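In code, the DIY-versus-hosted difference often comes down to one line: you either launch a browser locally or connect to one someone else runs. Here’s a minimal sketch with Playwright for Python. `connect_over_cdp()` is a real Playwright method, but the `wss://` URL has to come from your provider’s dashboard. The environment variable name and the helper names are hypothetical.

```python
from typing import Any, Optional


def browser_mode(ws_endpoint: Optional[str]) -> str:
    """'remote' when a hosted scraping-browser endpoint is configured, else 'local'."""
    return "remote" if ws_endpoint else "local"


def open_browser(p: Any, ws_endpoint: Optional[str] = None) -> Any:
    """Return a Playwright Browser: launched on this machine, or attached to a hosted one over CDP."""
    if browser_mode(ws_endpoint) == "remote":
        # Hosted: the provider runs Chrome; only automation commands cross the WebSocket.
        return p.chromium.connect_over_cdp(ws_endpoint)
    # DIY: Playwright launches its own Chromium here, so you manage the install and the server.
    return p.chromium.launch(headless=True)


# Usage (SCRAPING_BROWSER_WS is a hypothetical env var holding the provider's wss:// URL):
# import os
# from playwright.sync_api import sync_playwright
# with sync_playwright() as p:
#     browser = open_browser(p, os.environ.get("SCRAPING_BROWSER_WS"))
#     page = browser.new_page()
#     page.goto("https://example.com")
#     print(page.title())
#     browser.close()
```

The rest of the script stays the same either way, which is why switching to a hosted browser feels like a one-line change.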

Pro Tip: Puppeteer vs Selenium? Use Puppeteer or Playwright when speed and ease are priorities (especially if you code in JavaScript). Choose Selenium if you need multi-language support or complex browser scenarios. But remember – headless automation is only part of the puzzle. To really beat blocks, pair it with rotating proxies and CAPTCHA solving (either built-in or via tools like 2Captcha).
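For the DIY route, “rotating proxies” can be as simple as handing each new browser a different proxy. Here’s a minimal sketch that assumes you already have a list of proxy URLs from some provider (the ones shown are placeholders). The output dicts use the `server`/`username`/`password` shape that Playwright’s `launch(proxy=...)` option takes, and the helper name is mine. CAPTCHA solving isn’t covered here.

```python
from itertools import cycle
from typing import Iterator
from urllib.parse import unquote, urlsplit


def proxy_rotation(proxy_urls: list[str]) -> Iterator[dict[str, str]]:
    """Cycle endlessly through proxies, yielding Playwright-style proxy settings.

    Each input looks like 'http://user:pass@host:port'. Playwright wants the
    credentials as separate keys, so they're split out of the URL here.
    """
    if not proxy_urls:
        raise ValueError("need at least one proxy")
    configs = []
    for raw in proxy_urls:
        parts = urlsplit(raw)
        server = f"{parts.scheme}://{parts.hostname}"
        if parts.port:
            server += f":{parts.port}"
        config = {"server": server}
        if parts.username:
            config["username"] = unquote(parts.username)
        if parts.password:
            config["password"] = unquote(parts.password)
        configs.append(config)
    return cycle(configs)


# Usage: a fresh browser (and therefore a fresh exit IP) per batch of pages.
# rotation = proxy_rotation(["http://user:pass@proxy1.example:8000", "http://proxy2.example:8000"])
# browser = p.chromium.launch(headless=True, proxy=next(rotation))
```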

Tips and Takeaways

- Start simple, then scale up. I often begin with something like a Google Sheets import or Python’s requests library to see if a site’s data is available. When that fails (dynamic content, blocks, etc.), I graduate to a scraping browser service.

- Respect target sites. Even when using proxies, throttle your requests and obey robots.txt. Many scraping browsers have built-in rate limiting and retries (for example, Bright Data does “automatic retries and IP rotation”). Use those features so you don’t hammer a site.

- Test the free tier or trial. Nearly all these tools have some free credits or trials. I’d run a few sample scrapes (like pulling prices from Amazon or listings from Indeed) to see how each handles real obstacles.

- Pro Tip: Keep an eye on cookies and sessions. One trick is to capture cookies from a normal browser session on the target site and reuse them in your scraper if you hit a block. Many scraping browsers let you manage cookies via their API (Bright Data, Zyte, NodeMaven all do this).
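A couple of these habits are easy to put into code. Here’s a minimal sketch using Python’s standard library, with hypothetical function names throughout.

- `polite_plan()` reads a robots.txt you’ve already downloaded, lets `urllib.robotparser` decide which URLs are allowed, and honors its Crawl-delay. It falls back to a one-second default I picked arbitrarily. The standard parser only understands whole-number delays and plain path prefixes.
- `crawl_politely()` spaces out requests. `fetch` is whatever download function you already use.
- `cookie_header_to_playwright()` turns a Cookie header copied from DevTools into the name/value/domain/path dicts that Playwright’s `context.add_cookies()` accepts.

```python
import time
from typing import Callable, Iterable
from urllib import robotparser


def polite_plan(robots_txt: str, user_agent: str, urls: Iterable[str],
                default_delay: float = 1.0) -> tuple[list[str], float]:
    """Return (URLs robots.txt allows for user_agent, seconds to wait between requests)."""
    rp = robotparser.RobotFileParser()
    rp.parse(robots_txt.splitlines())
    allowed = [u for u in urls if rp.can_fetch(user_agent, u)]
    delay = rp.crawl_delay(user_agent)  # None when robots.txt sets no Crawl-delay
    return allowed, (float(delay) if delay is not None else default_delay)


def crawl_politely(urls: list[str], delay: float, fetch: Callable[[str], str]) -> list[str]:
    """Fetch URLs one at a time, sleeping `delay` seconds between requests."""
    results = []
    for i, url in enumerate(urls):
        if i:
            time.sleep(delay)
        results.append(fetch(url))
    return results


def cookie_header_to_playwright(cookie_header: str, domain: str) -> list[dict[str, str]]:
    """Turn a copied 'Cookie:' header value into dicts for Playwright's context.add_cookies()."""
    cookies = []
    for pair in cookie_header.split(";"):
        name, sep, value = pair.strip().partition("=")
        if sep and name:
            cookies.append({"name": name, "value": value, "domain": domain, "path": "/"})
    return cookies


# robots = "User-agent: *\nDisallow: /private\nCrawl-delay: 5"
# allowed, delay = polite_plan(robots, "my-scraper",
#                              ["https://example.com/jobs", "https://example.com/private/x"])
# allowed == ["https://example.com/jobs"], delay == 5.0
# context.add_cookies(cookie_header_to_playwright("session=abc123; theme=dark", ".example.com"))
```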

To wrap up: each of these tools can help you scrape smarter, but the “best” one depends on your needs. For large scale or enterprise projects, Bright Data’s feature set and uptime might be worth the cost. For a quick dev-friendly solution, ZenRows or Scrapeless could save a lot of setup time. Zyte is great if you want a fully managed API with legal compliance baked in. NodeMaven is a solid choice if you already use proxies heavily and want an easy Puppeteer/Playwright option.

No matter which you pick, remember that headless browsers are just tools – the key is combining them with good proxy practices and error handling. Happy scraping!